Aural Fields

Welcome to my Final Project/Dissertation for my master's degree in Game Development (Programming) at Kingston University.

I was heavily inspired by two projects: Alexander Sannikov's implementation of Radiance Cascades, which generates beautiful and, not least, performant Global Illumination, and Vercidium's Raytraced Audio. I felt that an approach combining the two could compute environment-aware spatial audio using cascading ray tracing.

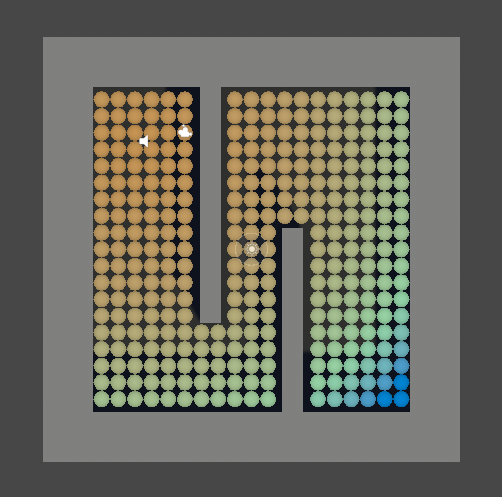

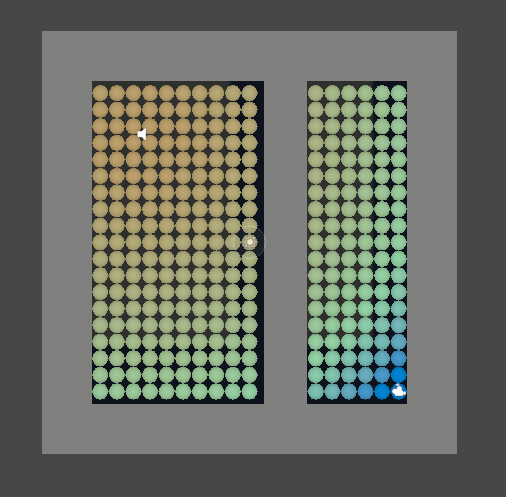

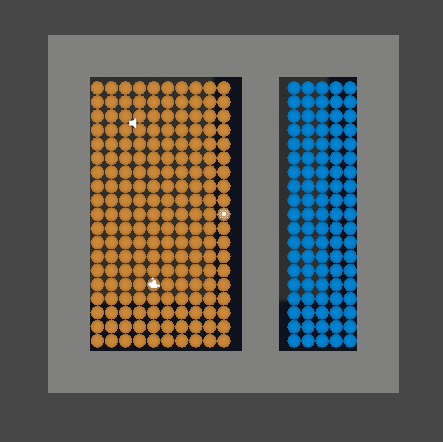

With Unity's spatial audio implementation, attenuation does not account for the environment, as the first set of images shows. The speaker symbol marks the audio source, which is playing a continuous tone.

The colors show the audio intensity at each position, running from red (highest) through a spectrum of intermediate colors to blue (lowest).

Apart from the falloff in intensity with distance, the environment causes no attenuation: the recorded intensities drop off radially, regardless of the geometry between the source and the listener.
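
To make the problem concrete, here is a minimal sketch of a distance-only rolloff. The `distance_gain` helper and its clamped 1/d curve are hypothetical stand-ins, not Unity's exact rolloff curve; the point is only that any gain computed purely from distance is the same for every point at the same range, whether or not a wall is in the way.

```python
import math

def distance_gain(listener, source, min_distance=1.0):
    """Hypothetical inverse-distance rolloff: the gain depends only on the
    straight-line distance between listener and source."""
    d = math.dist(listener, source)
    if d <= min_distance:
        return 1.0  # full volume inside the minimum distance
    return min_distance / d  # 1/d falloff beyond it

source = (0.0, 0.0)
open_side = (5.0, 0.0)    # clear line of sight to the source
behind_wall = (0.0, 5.0)  # imagine a wall between this point and the source

# Both points are 5 units away, so a distance-only model returns the same gain
# for both, even though one of them is occluded.
print(distance_gain(open_side, source), distance_gain(behind_wall, source))  # prints: 0.2 0.2
```

Because the geometry never enters the calculation, the iso-intensity contours are circles around the source, which matches the radial pattern in the first set of images.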

To solve this, I am developing the Aural Fields method.

The method uses a grid of "Aural Probes" that continuously perform spatial ray tracing around the environment to gather potential paths to audio sources.
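
As a rough illustration of what a single probe might compute, the sketch below casts evenly spaced rays from a probe across a 2D occupancy grid and reports the fraction that reach the source before hitting a wall. Everything here is an assumption for illustration: the `GRID` layout, `probe_visibility`, the fixed-step ray march, and the direct-path-only estimate are hypothetical, and the cascaded, multi-path gathering described above is not reproduced.

```python
import math

# Hypothetical 2D occupancy grid: '#' is a wall cell, '.' is open space.
# x indexes columns and y indexes rows, one unit per cell.
GRID = [
    "..........",
    "..........",
    "....#.....",
    "....#.....",
    "....#.....",
    "..........",
]

def is_wall(x, y):
    """Treat anything outside the grid, or a '#' cell, as solid."""
    col, row = math.floor(x), math.floor(y)
    if row < 0 or row >= len(GRID) or col < 0 or col >= len(GRID[0]):
        return True
    return GRID[row][col] == "#"

def probe_visibility(probe, source, source_radius=0.5, num_rays=256,
                     step=0.05, max_dist=20.0):
    """Fraction of uniformly spaced rays from `probe` that reach the source
    disc before hitting a wall (direct paths only, no reflections)."""
    hits = 0
    for i in range(num_rays):
        angle = 2.0 * math.pi * i / num_rays
        dx, dy = math.cos(angle), math.sin(angle)
        t = 0.0
        # March along the ray in fixed steps until it hits the source or a wall.
        while t < max_dist:
            x, y = probe[0] + dx * t, probe[1] + dy * t
            if math.dist((x, y), source) <= source_radius:
                hits += 1
                break
            if is_wall(x, y):
                break
            t += step
    return hits / num_rays

source = (2.5, 3.5)
print(probe_visibility((2.5, 0.5), source))  # clear line of sight
print(probe_visibility((6.5, 3.5), source))  # wall cells in between
```

Since the source subtends a smaller angle the farther away it is, this ray fraction already falls with distance; occlusion then drives it to zero behind the wall. Capturing sound that bends around or reflects off geometry would need further bounces or path gathering on top of this.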

With the grid in place, we can tap into the audio stream using Unity's OnAudioFilterRead callback to intercept the current audio buffer and adjust its intensity as required.
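
Unity's `MonoBehaviour.OnAudioFilterRead(float[] data, int channels)` callback receives an interleaved sample buffer and its channel count. The Python sketch below mirrors that buffer layout to show one way an attenuation could be applied. The `apply_gain` function and the per-buffer linear ramp, which smooths gain changes between buffers to avoid audible clicks, are assumptions for illustration, not the project's code.

```python
def apply_gain(data, channels, current_gain, target_gain):
    """Scale an interleaved float buffer in place, ramping linearly from
    current_gain to target_gain across the buffer.

    Returns the gain reached at the end of the buffer, to be passed in as
    current_gain for the next buffer."""
    frames = len(data) // channels
    if frames == 0:
        return current_gain
    for frame in range(frames):
        # Gain for this frame, interpolated across the buffer.
        g = current_gain + (target_gain - current_gain) * (frame + 1) / frames
        for ch in range(channels):
            data[frame * channels + ch] *= g
    return target_gain

# Stereo buffer of 4 frames, fading from full volume to silence.
buffer = [1.0] * 8
end_gain = apply_gain(buffer, 2, 1.0, 0.0)
print(buffer, end_gain)
```

Ramping instead of jumping straight to the new gain matters because the probe-derived attenuation can change between buffers as the listener moves, and a sudden step in gain is heard as a click.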

Once the probe values are calculated, the audio listener bilinearly interpolates the values at the vertices of its grid cell and applies the result as the final attenuation to the audio source.
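
A minimal sketch of the interpolation step, assuming a 2D (top-down) grid of square cells as in the heatmaps, with one attenuation value stored per probe at each cell vertex. The function name and argument layout are hypothetical. A full 3D probe grid would use the trilinear equivalent over eight vertices.

```python
def bilinear_attenuation(pos, cell_origin, cell_size, corners):
    """Bilinearly interpolate probe attenuations at the four vertices of the
    listener's grid cell.

    corners = (a00, a10, a01, a11): values at (x0, y0), (x1, y0), (x0, y1), (x1, y1).
    """
    # Normalised position of the listener inside the cell, clamped to [0, 1].
    u = min(max((pos[0] - cell_origin[0]) / cell_size, 0.0), 1.0)
    v = min(max((pos[1] - cell_origin[1]) / cell_size, 0.0), 1.0)
    a00, a10, a01, a11 = corners
    row_y0 = a00 * (1.0 - u) + a10 * u  # interpolate along x on the y0 edge
    row_y1 = a01 * (1.0 - u) + a11 * u  # interpolate along x on the y1 edge
    return row_y0 * (1.0 - v) + row_y1 * v  # then blend the two edges along y

# Listener at the centre of a 2x2 cell where only one corner probe hears the source.
print(bilinear_attenuation((1.0, 1.0), (0.0, 0.0), 2.0, (1.0, 0.0, 0.0, 0.0)))  # prints: 0.25
```

Interpolating between neighbouring probes keeps the attenuation continuous as the listener crosses cell boundaries, so the volume changes smoothly instead of stepping from one probe's value to the next.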

This produces an intuitive and organic-sounding audio experience.

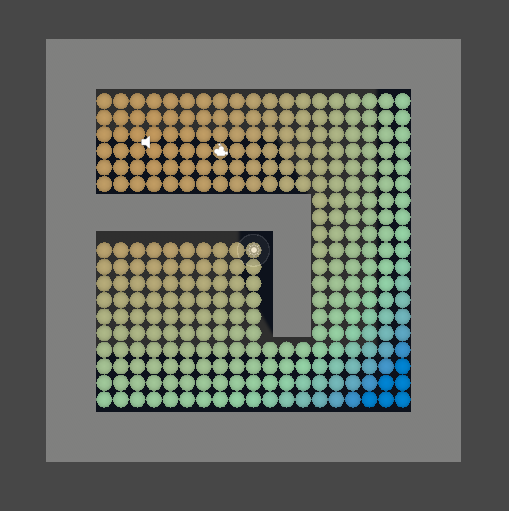

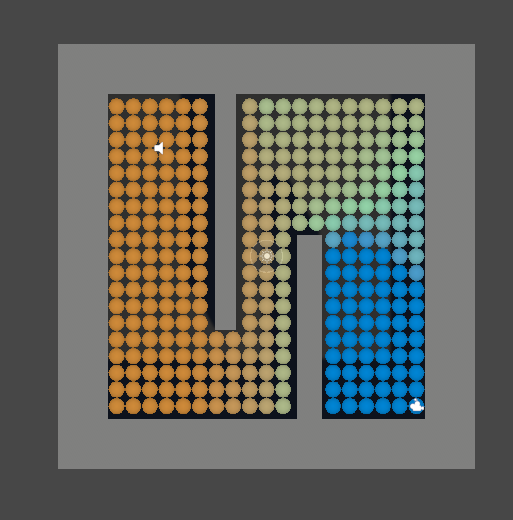

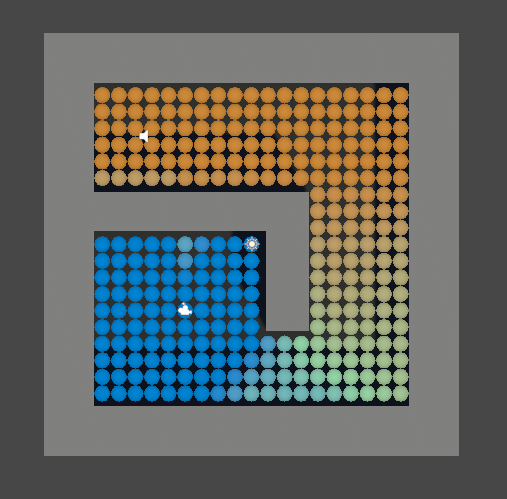

This is the resultant sound intensity profile with Aural Fields enabled.

Read the SIGGRAPH Asia paper here.

You can check out the project in its current state on my GitHub here.

I will be adding much more about the experimentation and my findings here soon, so stay tuned!